What's That Noise?! [Ian Kallen's Weblog]

Wednesday December 10, 2008

Cloud Hype, An Amazon Web Services Post-Mortem

In the last few years, Amazon Web Services (AWS) has broadened its scope to cover a range of infrastructure capabilities and has emerged as a game changer. The hype around AWS isn't all wrong: a whole ecosystem of tools and services has developed around AWS that makes the offering compelling. However, the hype isn't all right either. At Technorati, we used AWS this year to develop and put into production a new crawler and a system that produces the web page screenshot thumbnails now seen on search result pages. Now that that chapter is coming to a close, it's time for a retrospective.

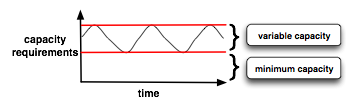

There's a prevailing myth that using the elasticity of EC2 makes it cheaper to operate than fixed assets. The theory is that by shutting down unneeded infrastructure during the lulls, you're saving money. In a purely fixed infrastructure model, Technorati's data acquisition systems must be provisioned for their maximum utilization. When utilization ebbs, a lot of that infrastructure sits relatively idle. That much is true, but the reality is that flexible capacity only saves money on the capacity above your minimum requirements. So the theory only holds if your variability is high compared to your minimum: that is, if the difference between your minimum and maximum capacity is large, or if you're not operating a 365/7/24 system but episodically using a lot of infrastructure and then shutting it down. Neither is true for us. The normal operating mode of Technorati's data acquisition systems follows the ebb and flow of the blogosphere, which varies a lot but is always on. The sketch to the left distinguishes the minimum capacity from the variable capacity.
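
To make that arithmetic concrete, here is a minimal sketch comparing a peak-provisioned fixed setup against a purely elastic one. Every number in it is made up for illustration: the two rates, the two demand profiles, and the premise that a rented machine-hour costs more than an owned one are all assumptions, not Technorati's or Amazon's actual figures.

```python
# Hypothetical rates in cents per machine-hour; not Technorati's or Amazon's real figures.
OWNED_RATE = 5    # assumed amortized cost of a machine you own and host
RENTED_RATE = 8   # assumed on-demand price for a comparable instance


def fixed_cost(demand, rate=OWNED_RATE):
    """Own enough machines for the peak hour and pay for them every hour."""
    return max(demand) * len(demand) * rate


def elastic_cost(demand, rate=RENTED_RATE):
    """Rent exactly the machines each hour needs, and nothing during lulls."""
    return sum(demand) * rate


# Machines needed per hour over one day (made-up profiles).
always_on = [80, 90, 100, 90] * 6    # varies, but never drops below 80
episodic = [0] * 20 + [100] * 4      # idle most of the day, then a big burst

for name, demand in [("always on", always_on), ("episodic", episodic)]:
    print(f"{name}: fixed={fixed_cost(demand)} elastic={elastic_cost(demand)}")
```

Under these assumed rates, the always-on profile is cheaper on fixed hardware (12000 vs. 17280 cents per day), while the episodic profile is far cheaper when rented (3200 vs. 12000). The crossover depends entirely on how large the variable part is relative to the minimum.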

In response to some of the fallacies George Reese posted on an O'Reilly blog the other day in "On Why I Don't Like Auto-Scaling in the Cloud", Don MacAskill from SmugMug wrote a really great post yesterday about his SkyNet system, "On Why Auto-Scaling in the Cloud Rocks". Don also emphasizes SmugMug's modest requirements for operations staff. In an application with sufficient simplicity and automation around it, it's easy to imagine a 365/7/24 service having meager ops burdens. I think we should surmise that operating SmugMug with autonomic de/provisioning is cost-effective because it fits their operating model. I understand Reese's concern that folks may not do the hard work of really understanding their capacity requirements if they're too coddled by automation. However, that concern comes off as a shill for John Allspaw's capacity planning book (which I'm sure is great, can't wait to read it). Bryan Duxbury from RapLeaf describes their use of AWS and how the numbers work out in his post, "Rent or Own: Amazon EC2 vs. Colocation Comparison for Hadoop Clusters". Since the target is to serve a Hadoop infrastructure, AWS must get a thumbs down in their case. Hadoop's performance is impaired by poor rack locality, and the latencies of Amazon's I/O systems clearly drag it down. If you're going to be running Hadoop on a continuous basis, use your own racks, with your own switches and your own disk spindles.

At Technorati, we're migrating the crawl infrastructure from AWS to our colo. While I love the flexibility that AWS provides, and it's been a great platform to ramp up on, the bottom line is that Technorati has a pre-existing investment in machines, racks and colo infrastructure. As much as I'd like our colo infrastructure to operate with lower labor and communication overhead, running on AWS has amounted to additional costs that we must curtail.

Cloud computing (or utility computing or flex computing or whatever it's called) is a game changer. So when do I recommend you use AWS? Ideally: anytime. If your application is architected to expand and contract its footprint with the demands put upon it, provision your minimum capacity requirements in your colo and use AWS to "burst" when your load demands it. Another case where using AWS is a big win is a total green field. If you don't have a colo, are still determining the operating characteristics of your applications and need machines provisioned, AWS is an incredible resource. However, I think the flexibility vs. economy imperatives will always lead you to optimize your costs by provisioning your minimum capacity in infrastructure that you own and operate.
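
The "own the minimum, burst the rest" recommendation can be sketched as a rough cost comparison. Again, this is only an illustration under stated assumptions: the rates (in cents per machine-hour), the demand profile and the function names are all hypothetical, and it ignores labor, bandwidth and the up-front cost of hardware.

```python
def owned_only(demand, owned_rate):
    """Provision owned machines for the peak and pay for them every hour."""
    return max(demand) * len(demand) * owned_rate


def rented_only(demand, rented_rate):
    """Rent every machine-hour on demand."""
    return sum(demand) * rented_rate


def hybrid(demand, owned_rate, rented_rate):
    """Own the minimum capacity; rent only the hours above that floor."""
    base = min(demand)
    owned = base * len(demand) * owned_rate
    burst = sum(d - base for d in demand) * rented_rate
    return owned + burst


# Hypothetical always-on workload: machines needed per hour over one day.
demand = [80, 90, 100, 90] * 6
OWNED_RATE, RENTED_RATE = 5, 8   # assumed cents per machine-hour

print("owned only: ", owned_only(demand, OWNED_RATE))
print("rented only:", rented_only(demand, RENTED_RATE))
print("hybrid:     ", hybrid(demand, OWNED_RATE, RENTED_RATE))
```

With these made-up numbers the hybrid comes out cheapest (11520 cents per day, against 12000 owned-only and 17280 rented-only). The margin is sensitive to the rates: at an assumed rented rate of 10 cents, the hybrid only ties with owning everything.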

There's also another option: instead of buying and operating your own machines and racks, you may be able to optimize costs by renting machines provisioned to your specs under a contract from the services that have established themselves in that market (Rackspace, Server Beach, ServePath, LayeredTech, etc.). Ultimately, I'm looking forward to the emergence of a compute marketplace where the decisions to incur capital expense, rent by the hour or rent under a contract will be easier to navigate.

amazon web services aws cloud computing rapleaf technorati smugmug hadoop oreilly data centers capacity planning

( Dec 10 2008, 11:53:19 PM PST )
